ABOUT

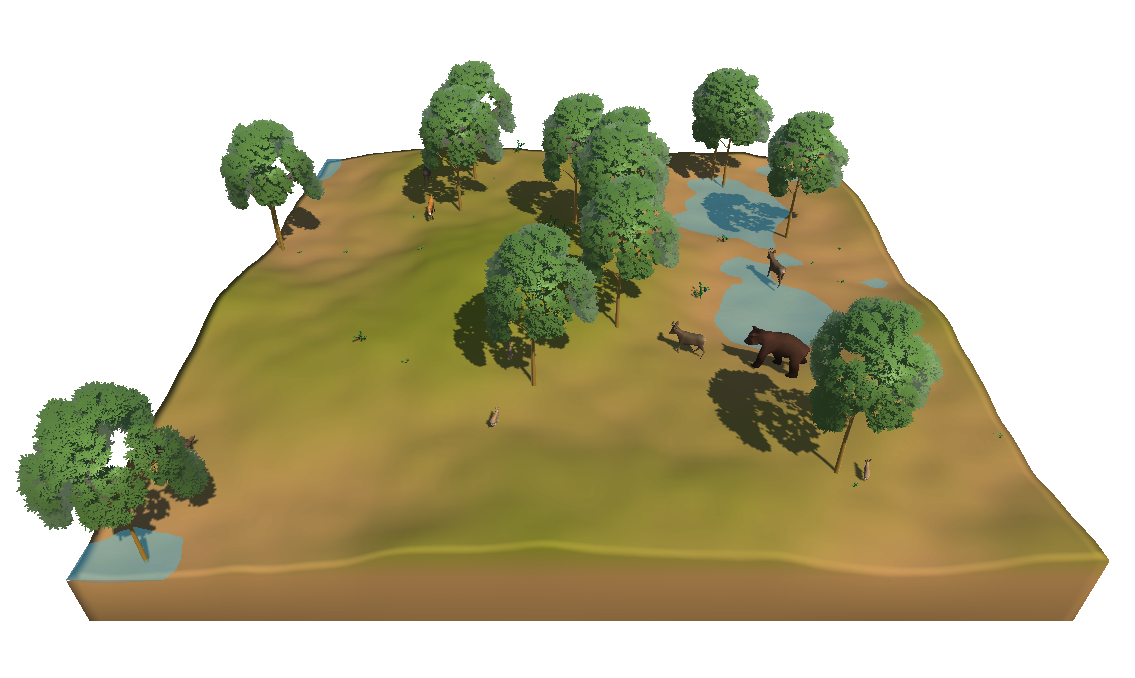

Hares and Bears is an ecosystem simulation in augmented reality (AR). With the application you can observe a virtual ecosystem containing four animal species: hares, foxes, bears and deer. The animals need to eat and drink to survive. What an animal eats depends on its species: it either eats the plants growing within the ecosystem (grass, blueberries and lingonberries) or hunts other animals. The animals also reproduce. As a user you have some control over the ecosystem, as you can spawn new animals to explore what it takes to create a balanced ecosystem. The project was developed in the course DH2413 Advanced Graphics and Interaction at KTH in the autumn of 2021.

Motivation

The main motivation behind the project was to explore what the future of STEM learning might look like, specifically within biology. We wanted to develop an AR application that helps younger students (7-9 year olds) learn about the food chain and how the balance in a simple ecosystem works. We also wanted to learn more about AR interaction and development.

Goals

Learn more about how to develop AR applications in Unity

Create an interactive AR application that helps our target group better understand food chains and the importance of a balanced ecosystem

Explore and learn more about procedural map generation

Challenges and learnings

One of our initial challenges was to understand how plane detection works in Unity's AR Foundation, and to scale the objects (the ground plane as well as the animals) to a reasonable size when viewed in AR. We also had some challenges implementing the animals' navigation, especially water finding, where the initial implementation caused the application to lag. We solved this by saving only the border points of the water and searching them only when an animal gets thirsty, which greatly improved performance. Hand tracking was also a challenge, as the group member implementing it had to learn a new library.
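The water-finding optimization can be illustrated with a minimal sketch. It is written in Python for brevity and is not the project's actual Unity code. The grid representation and the names `water_border_points` and `nearest_water` are assumptions made for illustration: the map is treated as a 2D grid of booleans, the border points are computed once, and the nearest one is looked up only when an animal becomes thirsty.

```python
from math import hypot


def water_border_points(water):
    """Return the water cells that touch at least one land cell.

    `water` is a hypothetical 2D grid (list of rows) where True means water.
    Only these border cells need to be stored, since an animal drinks
    from the shore and never needs the interior of a lake.
    """
    rows, cols = len(water), len(water[0])
    border = []
    for r in range(rows):
        for c in range(cols):
            if not water[r][c]:
                continue
            # Check the four direct neighbours for land.
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and not water[nr][nc]:
                    border.append((r, c))
                    break
    return border


def nearest_water(position, border):
    """Return the border cell closest to `position`, or None if there is no water.

    Intended to be called only when an animal gets thirsty, not every frame.
    """
    if not border:
        return None
    return min(border, key=lambda p: hypot(p[0] - position[0], p[1] - position[1]))
```

The border list would be built once after the map is generated, so each thirsty animal searches a short list of shore cells instead of the whole map.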

We have learned the basics of working with Unity’s AR Foundation, which includes placing a plane/surface and navigating around it in the real world to look at it from different angles through a mobile phone. We have also learned more about how to animate 3D models as well as procedural map generation using an overlapping noise technique. Furthermore, we have experimented with hand tracking in AR by implementing an animal feeding functionality.
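As an illustration of what an overlapping noise technique can look like, the sketch below layers several octaves of smooth noise into a height map. This is an assumption about the general approach, not the project's implementation: the page does not say which noise function or parameters were used. The sketch uses simple value noise, and all names (`lattice_value`, `smooth_noise`, `generate_heightmap`, `scale`, `octaves`) are hypothetical.

```python
import math


def lattice_value(ix, iy, seed):
    """Deterministic pseudo-random value in [0, 1) for an integer grid point."""
    n = (ix * 374761393 + iy * 668265263 + seed * 144665) & 0xFFFFFFFF
    n = ((n ^ (n >> 13)) * 1274126177) & 0xFFFFFFFF
    n ^= n >> 16
    return n / 0x100000000


def smooth_noise(x, y, seed):
    """Value noise: smoothly interpolate between the four surrounding grid values."""
    x0, y0 = math.floor(x), math.floor(y)
    tx, ty = x - x0, y - y0
    # Smoothstep easing avoids visible creases at grid lines.
    tx = tx * tx * (3 - 2 * tx)
    ty = ty * ty * (3 - 2 * ty)
    v00 = lattice_value(x0, y0, seed)
    v10 = lattice_value(x0 + 1, y0, seed)
    v01 = lattice_value(x0, y0 + 1, seed)
    v11 = lattice_value(x0 + 1, y0 + 1, seed)
    top = v00 + (v10 - v00) * tx
    bottom = v01 + (v11 - v01) * tx
    return top + (bottom - top) * ty


def generate_heightmap(width, height, scale=8.0, octaves=4, seed=0):
    """Overlap several noise layers: each octave doubles the frequency
    and halves the amplitude, adding finer detail on top of broad shapes."""
    heightmap = []
    for j in range(height):
        row = []
        for i in range(width):
            total, amplitude, frequency, norm = 0.0, 1.0, 1.0, 0.0
            for octave in range(octaves):
                total += amplitude * smooth_noise(
                    i / scale * frequency, j / scale * frequency, seed + octave
                )
                norm += amplitude
                amplitude *= 0.5
                frequency *= 2.0
            row.append(total / norm)  # normalise back into [0, 1)
        heightmap.append(row)
    return heightmap
```

In a sketch like this, cells whose height falls below a chosen threshold could be treated as water and the rest as land, which is one way such a height map could feed into terrain generation.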

Related work

Equilinox

Equilinox is a game where you can create and manage your own ecosystem. You can place plants and animals and observe as the ecosystem grows. If the ecosystem flourishes, the animals earn the player points, which can be used to purchase different in-game resources.

We took some inspiration from this game, and if we were to continue working on Hares and Bears, our vision would be to have a richer variety of animals and plants, just as in Equilinox. One difference between Equilinox and our project is that our ecosystem is viewed using AR, and the main goal is to experiment and see what it takes to create a balanced ecosystem, as well as to observe the ecosystem that you have created. Thus we did not want features such as currencies, which are more gameplay focused.

The Table Mystery

The Table Mystery is a collaborative AR educational game focused on teaching younger students about the periodic table.

This project is quite different from ours, as it focuses on teaching chemistry while ours focuses on teaching biology. The Table Mystery is also more gameplay focused, with a story where students need to work together to solve a problem. The main similarity between the two projects is that they both use augmented reality, so we found it interesting to read more about it in order to better understand how AR can be used in an educational setting.

Technology

Unity

The project was developed in Unity, a cross-platform game engine. For the AR implementation we used Unity's AR Foundation. All group members had previous experience of working with Unity, which is why we thought it was a good choice for this project. Since none of us had worked with AR before, Unity’s AR Foundation was a good tool for learning AR development without having to implement everything an AR application requires from scratch.

Blender

Blender is open source software that can be used, for example, to create 3D models and animations. We used Blender to create the 3D models of the animals and plants and some of the textures, and to rig and animate the animals. We chose Blender because the group members working with models and animations had previous experience with it, and because it is a powerful open source tool.

ManoMotion

ManoMotion is a library that can be used for real-time 2D and 3D hand tracking. We used ManoMotion to create a feeding functionality that enables the user to hold their hand in front of their mobile camera to create a virtual hand holding a fruit in the AR scene. The user can then feed this fruit to one of the animals. We decided to use ManoMotion as the hand tracking library because it had a free version available and because it could be used in Unity.

Adobe Substance

We used Adobe Substance Painter and Substance Designer to create textures for some of the animals. They are powerful texturing tools, which gave us the flexibility to create textures that suit the style we wanted for our application.

GitHub

GitHub is a service that provides hosting for software development as well as version control. We chose GitHub for our project because separate branches make it much easier for several people to work on different parts of the project simultaneously. We also used GitHub to plan and divide work between group members, using a GitHub project board and issues. Furthermore, we use GitHub Pages to host our project website.

DEMO VIDEO

THE MAKING OF HARES AND BEARS

GALLERY

TEAM

Max Truedsson

I have had two main areas of responsibility: models and terrain. Programming-wise I worked mainly on the procedural generation, both the logic for generating the mesh and some of the game logic for interacting with the terrain (mainly water). I also created three of the models: a fox, a hare and a tree. Two of these models were also animated, with a total of 10 animations (5 each).

Robin Bertin

I have worked on the AR interaction: plane detection, map placement, hand recognition and spawn on touch. I made the UI for spawning animals and managing the time. I created the plant propagation system and the system responsible for spawning the animals when the map is generated. Finally, I made the system that lets the animals eat from the user's hand through hand recognition.

Erik Westergren

I have mainly worked on the behavior system for the animals and the ecosystem logic. I also implemented the logic that allows the user to control the time in the simulation, and I was responsible for adding the ability to let users scare nearby animals. I also created the particle effect played when animals mate.

Malin Liedholm

I have been working on creating the 3D models and textures for the plants, as well as for the deer and the bear. I have also created animations for the deer and the bear. Furthermore, I have been working on implementing all the animations in Unity. I was also responsible for creating the project website.

Andreas Wingqvist

I have been working on the UI, shaders and the particle system. For the UI I implemented the spawning of the animals onto the map. I created the shader for the water so that it looks like it is moving. I also implemented some particle effects for certain animal interactions. I was also responsible for creating "The making of HaB" video for this project.